I have been working with Elastic Cloud to achieve this, using a .NET 6 web API example solution. This solution uses an Azure Event Hub to store logs, which Elastic Cloud pulls from. I'm also sending trace data separately, and ensuring the trace.id and transaction.id fields exist on every log so we can correlate traces with logs to provide a single view of transactions in Elastic Cloud APM. It is the trace.id and transaction.id fields that are the key to this.

Nuget packages required

Elastic.Apm.NetCoreAll - gives us auto instrumentation of trace data into Elastic Cloud's APM server.

Microsoft.Azure.EventHubs (deprecated, but a dependency to the UKHO package) - for connecting to and working with Azure Event Hubs.

UKHO.Logging.EventHubLogProvider - provides a logging sink for Microsoft.Extensions.Logging.Abstractions. Logs are sent to Azure Event Hub as a JSON message. Using this so my example would be relevant to where I work!

Configuration values

I'm using Azure Key Vault and an appsettings.json config file in this PoC to pull in configuration settings required in this example app.

Configuring the logging set up - Program.cs

This line specifies we want to send trace information to Elastic Cloud's APM server. That's all we need to register the service in Elastic APM and record trace information against each method:

Implementation

Elastic APM relies on transaction.id and trace.id fields existing on log messages to correlate trace data with logs.

As we are not automatically sending trace.id and transaction.id values with each of our logs, we have to use manual log correlation to add them as properties on our log messages.

As the below example shows, we can use the Elastic.Apm.Agent.Tracer.CurrentTransaction property (from the Elastic.APM Nuget mentioned before) anywhere in code to access the currently active transaction, and add the trace.id and transaction.id properties to our logs:

Now as part of the JSON that makes up the "message" field on our log document, we will have trace.id and transaction.id:

However, before we can correlate these logs to our APM traces, we have to do further processing in Elastic using Filebeat's processors.

Configure Event Hub integration in Fleet

We will assume the integration to pull logs from an Azure Event Hub is already set up in Elastic Cloud via Fleet and Elastic Agent, as described here. We need to add two processors to this configuration to pull out the trace.id and transaction.id values into dedicated fields. These are set under the advanced options for the integration, using YAML.

decode_json_fields

This is the first processor we will use. It decodes fields containing JSON strings and replaces the strings with valid JSON objects. Add this to the Processors field under advanced settings for the Event Hub integration as follows:

So we're instructing the decode_json_fields processor to decode the message field and replace the strings with valid JSON objects. We could add a new field name to the "target" option, but we're leaving this blank so the processor decodes and updates the message field.

rename

Our message field now has valid JSON objects, but given how nested transaction.id and trace.id were inside the message field, they are named as:

- Properties.transaction.id

- Properties.trace.id

We can use the rename processor to rename trace.id and transaction.id to what we want.

That's all the processors we need, so save the configuration changes to the agent policy.

View in Kibana

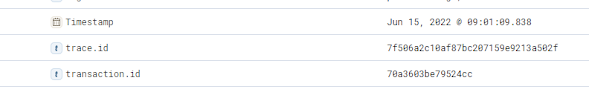

Now in Kibana when we view a log that has been ingested after our Event Hub integration config changes, we will see the trace.id and transaction.id pulled out into dedicated fields:

This means that Elastic APM can now correlate APM traces with our logs.

View in APM

When we view a transaction that contains logging in APM, we can now see all the trace information and the log messages in one view :)

Logging middleware

As a further demonstration, this project also contains logging middleware to log each request made against the API, where we are adding the transaction.id and trace.id to the log message each time: